musubi-tuner Wan2.2 training image

A musubi-tuner Wan2.2 LoRA training image with the models and a dataset built in.


300 CNY/hour

v1.0

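musubi Wan2.2 training image

- All models are already downloaded in this image, and a training dataset is prepared.

- Official musubi-tuner repository: https://github.com/kohya-ss/musubi-tuner

- Official training documentation: https://github.com/kohya-ss/musubi-tuner/blob/main/docs/wan.md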


Key steps

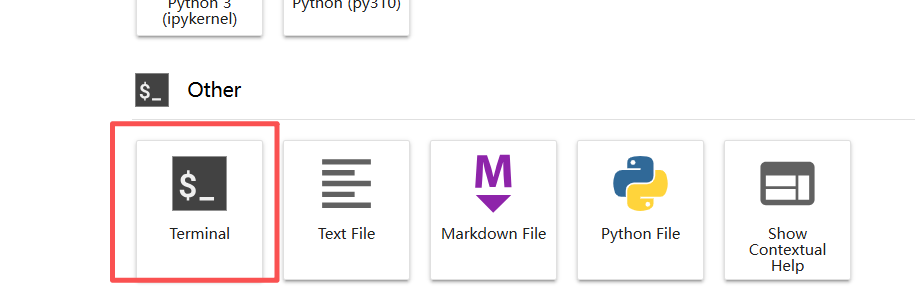

1. Open a terminal

- Click JupyterLab, then open a terminal from there.

2. Go to the musubi-tuner root directory

cd /workspace/musubi-tuner/

3. Download the models

- We recommend downloading the models with the huggingface-cli or wget command.

- Wan2.2 main models: https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/tree/main/split_files/diffusion_models

- T5 encoder: https://huggingface.co/Wan-AI/Wan2.1-I2V-14B-720P/blob/main/models_t5_umt5-xxl-enc-bf16.pth

- VAE: https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/blob/main/split_files/vae/wan_2.1_vae.safetensors
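The T5 and VAE links above are Hugging Face "blob" page URLs, which return an HTML page rather than the file when passed to wget. A minimal sketch of one way to handle this, assuming the usual Hugging Face convention that replacing `/blob/` with `/resolve/` gives the direct download URL. The helper name `hf_resolve_url` is hypothetical, and the target folders are taken from the paths used by the later commands. The commands are only printed (dry run), since the image already contains the models.

```shell
# Turn a Hugging Face "blob" page URL into a direct "resolve" download URL.
# hf_resolve_url is a hypothetical helper name, not part of any tool.
hf_resolve_url() {
    printf '%s\n' "$1" | sed 's#/blob/#/resolve/#'
}

T5_URL=$(hf_resolve_url "https://huggingface.co/Wan-AI/Wan2.1-I2V-14B-720P/blob/main/models_t5_umt5-xxl-enc-bf16.pth")
VAE_URL=$(hf_resolve_url "https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/blob/main/split_files/vae/wan_2.1_vae.safetensors")

# Dry run: print the wget commands; drop the leading "echo" to really download.
# -c resumes a partial download, -P sets the target directory.
echo wget -c -P models/wan2.2/text_encoders "$T5_URL"
echo wget -c -P models/wan2.2/vae "$VAE_URL"

# Alternative with huggingface-cli (also printed only). Note that --local-dir
# keeps the repository sub-folders, so this file would land under
# models/wan2.2/split_files/diffusion_models/ and may need to be moved.
echo huggingface-cli download Comfy-Org/Wan_2.2_ComfyUI_Repackaged \
    split_files/diffusion_models/wan2.2_t2v_low_noise_14B_fp16.safetensors \
    --local-dir models/wan2.2
```

Run it from /workspace/musubi-tuner/ so that the relative `models/wan2.2/...` folders match the paths used in the preprocessing and training commands.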

4. Dataset preprocessing

- Image encoding (cache the VAE latents)

python src/musubi_tuner/wan_cache_latents.py --dataset_config dataset/cendy_wan2.2.toml \
    --vae /workspace/musubi-tuner/models/wan2.2/vae/wan_2.1_vae.safetensors

- Prompt encoding (cache the text encoder outputs)

python src/musubi_tuner/wan_cache_text_encoder_outputs.py --dataset_config dataset/cendy_wan2.2.toml \
    --t5 models/wan2.2/text_encoders/models_t5_umt5-xxl-enc-bf16.pth --batch_size 16
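Both caching commands read the dataset definition from the file passed to `--dataset_config`. To train on your own images you would point them at your own config. The following is a minimal sketch that writes such a file, assuming the key names described in the musubi-tuner dataset configuration documentation. The output path, image folder, cache folder, and resolution are all hypothetical placeholders, so check them against the official docs and the bundled dataset/cendy_wan2.2.toml before use.

```shell
# Where to write the example config (hypothetical path; it does not
# overwrite the dataset/cendy_wan2.2.toml shipped with the image).
CONFIG=${CONFIG:-/tmp/example_wan2.2.toml}

cat > "$CONFIG" <<'EOF'
# Minimal image dataset: each image has a caption file with the same
# base name and the extension given by caption_extension.
[general]
resolution = [960, 544]
caption_extension = ".txt"
batch_size = 1
enable_bucket = true
bucket_no_upscale = false

[[datasets]]
# Hypothetical folders; replace with your own.
image_directory = "/workspace/musubi-tuner/dataset/example/images"
cache_directory = "/workspace/musubi-tuner/dataset/example/cache"
num_repeats = 1
EOF

echo "wrote $CONFIG"
```

Passing this file to `--dataset_config` in the two caching commands and in the training command keeps all three steps working on the same dataset and cache folder.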

5. Model training

- Train the low-noise model only

accelerate launch --num_cpu_threads_per_process 1 --mixed_precision fp16 src/musubi_tuner/wan_train_network.py \
    --task t2v-A14B \
    --dit models/wan2.2/diffusion_models/wan2.2_t2v_low_noise_14B_fp16.safetensors \
    --dataset_config dataset/cendy_wan2.2.toml --sdpa --mixed_precision fp16 --fp8_base \
    --optimizer_type adamw8bit --learning_rate 2e-4 --gradient_checkpointing \
    --max_data_loader_n_workers 2 --persistent_data_loader_workers \
    --network_module networks.lora_wan --network_dim 32 \
    --timestep_sampling shift --discrete_flow_shift 8.0 \
    --max_train_epochs 300 --save_every_n_epochs 10 --seed 42 \
    --output_dir output --output_name cendy_wan2.2_v1 --blocks_to_swap 35 \
    --min_timestep 0 --max_timestep 875 \
    --preserve_distribution_shape

- Train the high-noise model only

accelerate launch --num_cpu_threads_per_process 1 --mixed_precision fp16 src/musubi_tuner/wan_train_network.py \
    --task t2v-A14B \
    --dit models/wan2.2/diffusion_models/wan2.2_t2v_high_noise_14B_fp16.safetensors \
    --dataset_config dataset/cendy_wan2.2.toml --sdpa --mixed_precision fp16 --fp8_base \
    --optimizer_type adamw8bit --learning_rate 2e-4 --gradient_checkpointing \
    --max_data_loader_n_workers 2 --persistent_data_loader_workers \
    --network_module networks.lora_wan --network_dim 32 \
    --timestep_sampling shift --discrete_flow_shift 8.0 \
    --max_train_epochs 300 --save_every_n_epochs 10 --seed 42 \
    --output_dir output1 --output_name cendy_wan2.2_v1 --blocks_to_swap 35 \
    --min_timestep 875 --max_timestep 1000 \
    --preserve_distribution_shape

- Train the high-noise and low-noise models together

accelerate launch --num_cpu_threads_per_process 1 --mixed_precision fp16 src/musubi_tuner/wan_train_network.py \
    --task t2v-A14B \
    --dit models/wan2.2/diffusion_models/wan2.2_t2v_low_noise_14B_fp16.safetensors \
    --dit_high_noise models/wan2.2/diffusion_models/wan2.2_t2v_high_noise_14B_fp16.safetensors \
    --dataset_config dataset/cendy_wan2.2.toml --sdpa --mixed_precision fp16 --fp8_base \
    --optimizer_type adamw8bit --learning_rate 2e-4 --gradient_checkpointing \
    --max_data_loader_n_workers 1 --persistent_data_loader_workers \
    --network_module networks.lora_wan --network_dim 32 \
    --timestep_sampling shift --discrete_flow_shift 8.0 \
    --max_train_epochs 300 --save_every_n_epochs 25 --seed 42 \
    --output_dir output3 --output_name cendy_wan2.2_v1 --blocks_to_swap 35

6. Model conversion

python src/musubi_tuner/convert_lora.py --input output/cendy_wan2.2_v1.safetensors --output output/output/cendy_wan2.2_low_v1.safetensors --target other
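The command above converts a single LoRA file. When the low-noise and high-noise models were trained separately, each run writes a file with the same `--output_name` into its own `--output_dir` (`output` and `output1` in the training commands), so both need converting under distinct names. A small sketch of that, reusing only the `--input`, `--output`, and `--target other` flags shown above. The helper name `convert_cmd`, the `converted/` folder, and the `_low`/`_high` file names are hypothetical. The commands are printed rather than executed.

```shell
# Build the convert_lora.py command line for one LoRA file.
# $1 = trained LoRA file, $2 = converted file to write
# convert_cmd is a hypothetical helper name.
convert_cmd() {
    printf 'python src/musubi_tuner/convert_lora.py --input %s --output %s --target other\n' "$1" "$2"
}

# output/ and output1/ are the --output_dir values of the two single-model
# training runs; converted/ and the _low/_high names are hypothetical.
LOW_CMD=$(convert_cmd output/cendy_wan2.2_v1.safetensors converted/cendy_wan2.2_low_v1.safetensors)
HIGH_CMD=$(convert_cmd output1/cendy_wan2.2_v1.safetensors converted/cendy_wan2.2_high_v1.safetensors)

# Dry run: print what would be executed.
echo "mkdir -p converted"
echo "$LOW_CMD"
echo "$HIGH_CMD"
```

Creating the output folder first avoids a failed write if the target directory does not exist yet.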

@有趣的80后程序员 (verified author)


Image information

Used 369 times

Run time

2304 h

Image size

150GB

Last updated

2025-10-28

Supported GPU types

RTX 40 series, 2080, 3080Ti, 3090, 48G RTX 40 series, 2080Ti, H20, A800, P40, A100, RTX 50 series, V100S

+12 more

Framework version

CUDA version

12.4

Applications

JupyterLab: 8888

Version

v1.0

2025-10-28