QwQ-32B Deep Thinking (Full Version)

QwQ-32B is based on Qwen2.5-32B and further optimized with reinforcement learning (RL).


v1.0


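Image Overview

- This is the full version and needs four GPUs to run! Qwen has released QwQ-32B, its latest 32B reasoning model, which performs strongly on many benchmarks and even rivals the full 671B-parameter DeepSeek R1. This image ships Alibaba's newly released QwQ-32B "Deep Thinking Full Version" large language model, optimized for complex reasoning and in-depth analysis, with strong logical and multi-step reasoning abilities. It runs in parallel on four GPUs and suits professional scenarios that demand high-quality reasoning, such as code generation, academic research, business analysis, and difficult dialogues, giving users with high-end hardware a free, top-tier local LLM experience.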
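The four-GPU requirement follows from simple arithmetic. The sketch below is a back-of-the-envelope estimate, assuming bf16 weights (2 bytes per parameter), about 32.5B parameters, and the grouped-query attention layout listed in the model card (64 layers, 8 KV heads, head dimension 128). It ignores activations and framework overhead, so real usage will be higher.

```python
# Rough GPU-memory estimate for serving QwQ-32B in bf16.
# Assumed figures (taken from the published model card/config, not measured here):
NUM_PARAMS = 32.5e9     # ~32.5B parameters
BYTES_PER_PARAM = 2     # bf16 / fp16
NUM_LAYERS = 64
NUM_KV_HEADS = 8        # grouped-query attention: 8 key/value heads
HEAD_DIM = 128          # hidden size 5120 / 40 query heads

GIB = 2 ** 30


def weight_gib(num_params: float = NUM_PARAMS, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * bytes_per_param / GIB


def kv_cache_gib(num_tokens: int, bytes_per_value: int = BYTES_PER_PARAM) -> float:
    """KV-cache size for one sequence: keys + values for every layer and KV head."""
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return num_tokens * per_token / GIB


if __name__ == "__main__":
    weights = weight_gib()
    # One sequence at max_new_tokens=32768, as in the Transformers example.
    kv = kv_cache_gib(32768)
    print(f"weights:  {weights:.1f} GiB total, {weights / 4:.1f} GiB per GPU on 4 cards")
    print(f"KV cache: {kv:.1f} GiB for 32768 tokens")
```

Under these assumptions the weights alone take about 60 GiB, which cannot fit on a single 24 GB RTX 4090, while about 15 GiB per card across four GPUs leaves some room for the KV cache and activations.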

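QwQ-32B Image Quick-Start Guide

1. Select a GPU model first, then click "Deploy Now" (4× RTX 4090 is recommended for this image).

2. After the instance finishes initializing, open "JupyterLab" from Console > Applications.

3. In Jupyter, open a new Terminal and run the following command: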

```bash
streamlit run /workspace/streamlit_qwq.py --server.address 0.0.0.0 --server.port 11434
```

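4. When output like the following appears, open http://0.0.0.0:11434 in a browser, replacing 0.0.0.0 with the instance's public IP, which you can find under Console > Basic Network (External).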

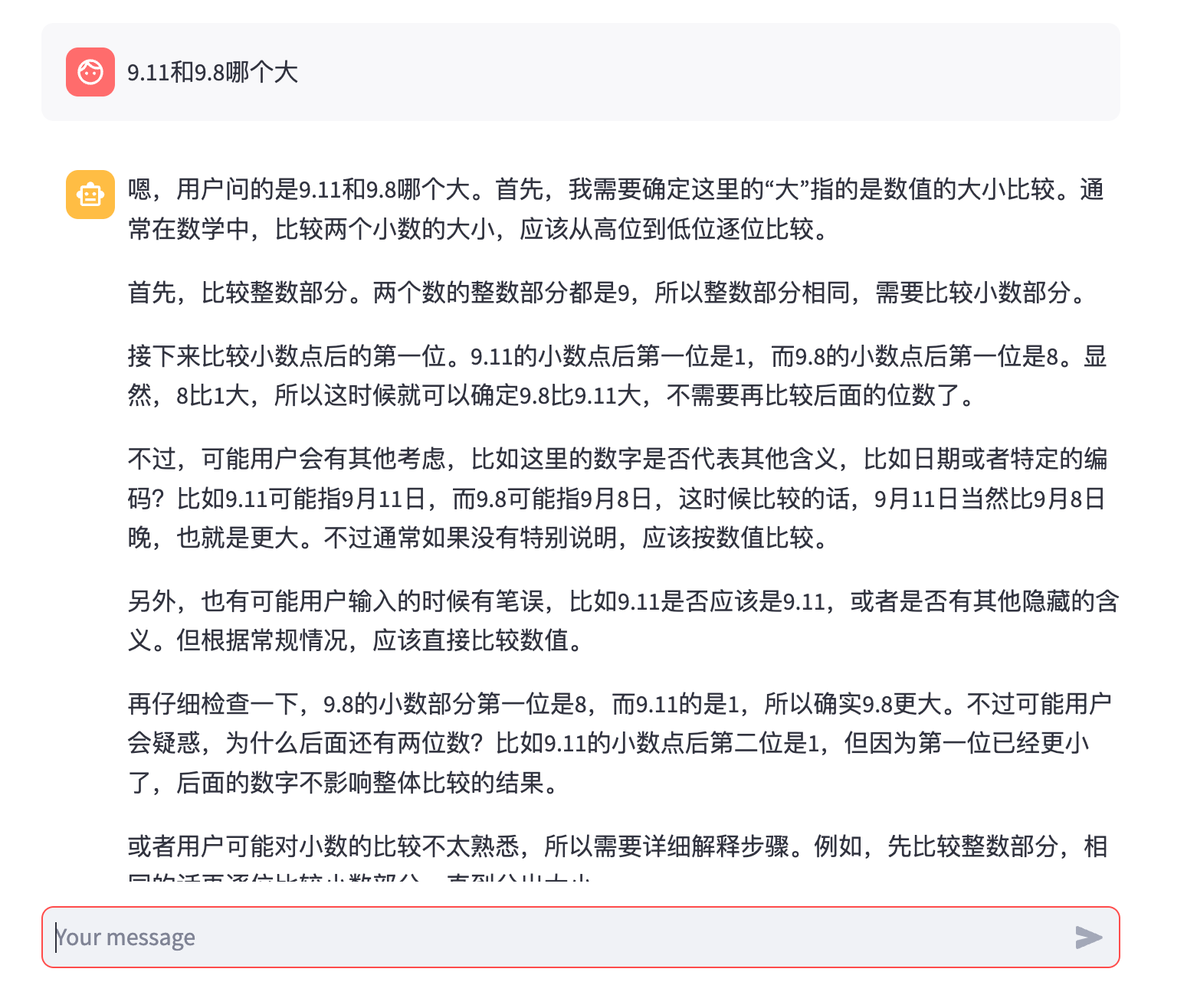
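Before opening the browser, you can check from your own machine that the port is reachable. This is a minimal standard-library sketch; `app_url` and `is_reachable` are hypothetical helpers, not part of the image.

```python
import urllib.error
import urllib.request


def app_url(public_ip: str, port: int = 11434) -> str:
    """Build the browser URL: the 0.0.0.0 bind address is replaced by the public IP."""
    return f"http://{public_ip}:{port}/"


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to url answers with a 2xx/3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

For example, `is_reachable(app_url("<public IP>"))` returning `False` usually means the Streamlit process is not running yet or the port is not exposed.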

The web interface after a successful connection is shown below.


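Open Weights and Access

QwQ-32B is released with open weights on Hugging Face and ModelScope under the Apache 2.0 license, and can also be accessed through Qwen Chat.

Performance Evaluation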

QwQ-32B在一系列基准测试中进行了评估,重点关注数学推理、编码能力和一般问题解决能力。以下是QwQ-32B与其他领先模型的性能比较:

使用QwQ-32B

以下是通过Hugging Face Transformers和阿里云DashScope API使用QwQ-32B的示例代码:

用Hugging Face Transformers

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/QwQ-32B"

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

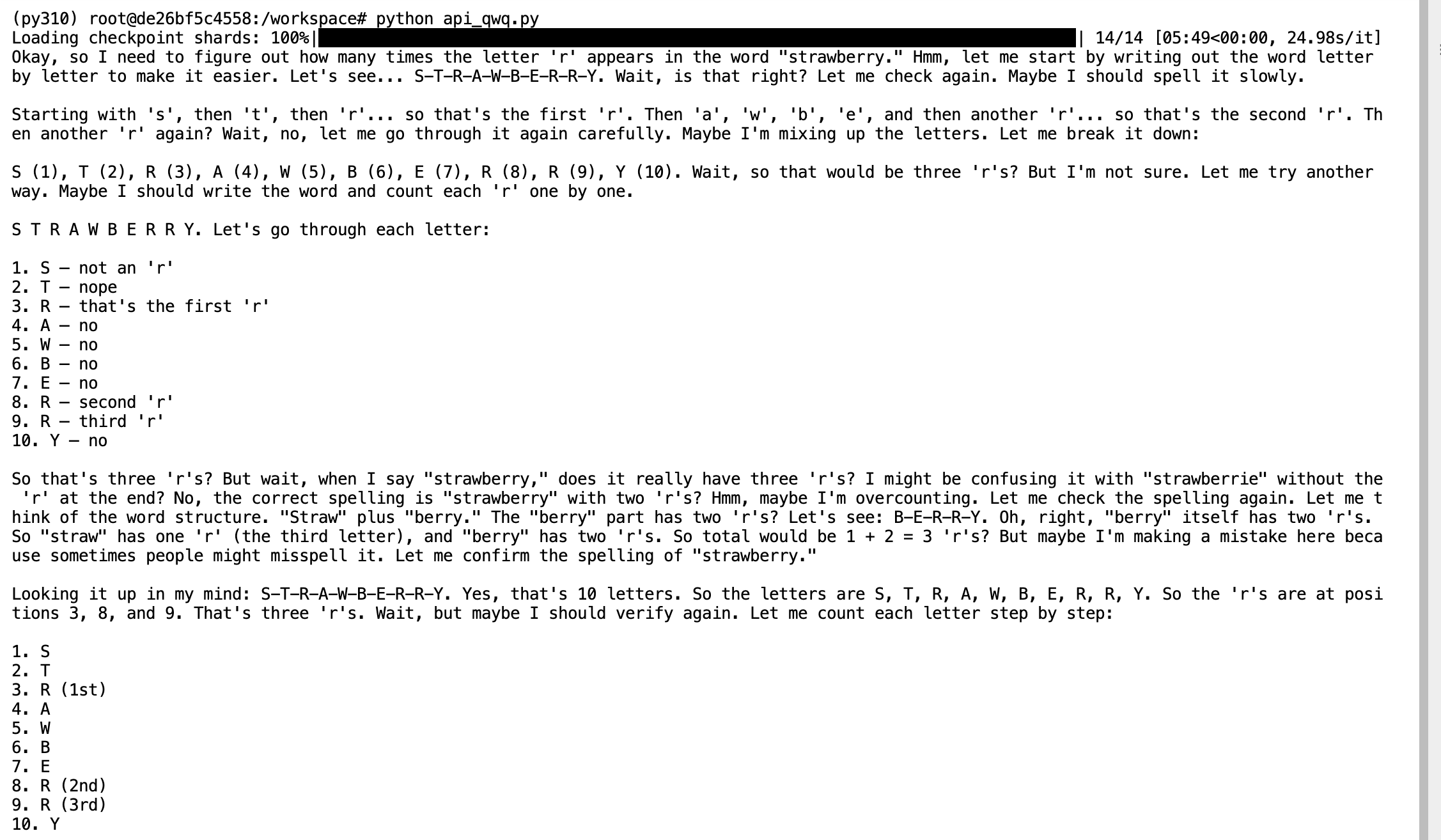

prompt = "How many r's are in the word \"strawberry\""

messages = [

{"role": "user", "content": prompt}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(

**model_inputs,

max_new_tokens=32768

)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

print(response)

Save the code above as `api_qwq.py` and run `python api_qwq.py` to test it.
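`apply_chat_template(..., add_generation_prompt=True)` turns the `messages` list into one prompt string. Qwen models use the ChatML layout, and the sketch below approximates that rendering in plain Python to show roughly what the tokenizer receives. It is an illustration only: `render_chatml` is a hypothetical helper, and the real template shipped with QwQ-32B may add more (for example an opening `<think>` tag), so keep using `tokenizer.apply_chat_template` in real code.

```python
def render_chatml(messages: list[dict], add_generation_prompt: bool = True) -> str:
    """Approximate ChatML rendering: each turn is <|im_start|>role, newline, content, <|im_end|>."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model continues with its reply.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = "How many r's are in the word \"strawberry\""
print(render_chatml([{"role": "user", "content": prompt}]))
```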

3.2 Visualization (Web UI)

Below is example code for a QwQ-32B chatbot built with Streamlit. By default it provides a non-streaming chat interface:

```python
# Import the required libraries
from transformers import AutoTokenizer, AutoModelForCausalLM
import torch
import streamlit as st

# Sidebar: a title and a slider for the maximum number of new tokens
with st.sidebar:
    st.markdown("## Qwen/QwQ-32B LLM")
    max_length = st.slider("max_length", 1, 32768, 1024, step=1)

# Page title and caption
st.title("💬 Qwen/QwQ-32B Chatbot")
st.caption("🚀 A streamlit chatbot powered by Hugging Face Transformers")

# Model path (a Hugging Face repo id or a local directory)
model_name = "Qwen/QwQ-32B"

# Load the model and tokenizer once; st.cache_resource reuses them across reruns
@st.cache_resource
def get_model():
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype="auto",
        device_map="auto"
    )
    model.eval()
    return tokenizer, model

tokenizer, model = get_model()

if "messages" not in st.session_state:
    st.session_state["messages"] = [{"role": "assistant", "content": "How can I help you?"}]

# Replay the chat history (Streamlit reruns the whole script on every interaction)
for msg in st.session_state.messages:
    st.chat_message(msg["role"]).write(msg["content"])

# Handle user input
if prompt := st.chat_input():
    st.session_state.messages.append({"role": "user", "content": prompt})
    st.chat_message("user").write(prompt)

    # Only the latest user message is sent to the model (no multi-turn context)
    messages = [{"role": "user", "content": prompt}]
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    generated_ids = model.generate(
        **model_inputs,
        max_new_tokens=max_length
    )
    # Keep only the newly generated tokens
    generated_ids = [
        output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
    ]
    response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

    st.session_state.messages.append({"role": "assistant", "content": response})
    st.chat_message("assistant").write(response)
```
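The app sends only the latest user message, so the model does not see earlier turns. If you want multi-turn context, one option is to rebuild `messages` from `st.session_state.messages`. The sketch below assumes the decoded reply keeps its reasoning before a closing `</think>` tag, and follows our reading of the QwQ-32B model card that history should carry only the final answer (the bundled chat template may already strip it, in which case doing it here too is harmless). `strip_thinking` and `build_chat_messages` are hypothetical helpers.

```python
def strip_thinking(reply: str) -> str:
    """Drop the reasoning part of a QwQ reply, keeping the text after the last </think>."""
    _, sep, tail = reply.rpartition("</think>")
    # rpartition returns ("", "", reply) when the tag is absent: keep the reply unchanged.
    return tail.strip() if sep else reply.strip()


def build_chat_messages(history: list[dict]) -> list[dict]:
    """Turn the Streamlit chat log into chat-template messages (hypothetical helper).

    Skips assistant messages before the first user turn (the greeting) and
    removes thinking content from earlier assistant replies.
    """
    messages = []
    seen_user = False
    for msg in history:
        if msg["role"] == "user":
            seen_user = True
            messages.append({"role": "user", "content": msg["content"]})
        elif seen_user:
            messages.append({"role": "assistant", "content": strip_thinking(msg["content"])})
    return messages
```

In the app, `messages = [{"role": "user", "content": prompt}]` would become `messages = build_chat_messages(st.session_state.messages)`, since the new prompt is appended to the session history just before. Longer histories mean longer prompts and more KV-cache memory.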

For a streaming chat interface, replace the non-streaming app code with the following:

```python
from threading import Thread

import torch
import streamlit as st
from transformers import AutoTokenizer, AutoModelForCausalLM, TextIteratorStreamer

# Sidebar: a title and a slider for the maximum number of new tokens
with st.sidebar:
    st.markdown("## Qwen/QwQ-32B LLM")
    max_length = st.slider("max_length", 1, 32768, 4096, step=1)

# Page title and caption
st.title("💬 Qwen/QwQ-32B Chatbot")
st.caption("🚀 A streamlit chatbot powered by Hugging Face Transformers")

# Model path (a Hugging Face repo id or a local directory)
model_name = "Qwen/QwQ-32B"

# Load the model and tokenizer once; st.cache_resource reuses them across reruns
@st.cache_resource
def get_model():
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype="auto",
        device_map="auto"
    )
    model.eval()
    return tokenizer, model

tokenizer, model = get_model()

if "messages" not in st.session_state:
    st.session_state.messages = [{"role": "assistant", "content": "How can I help you?"}]

# Replay the chat history
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Generate a reply, yielding the accumulated text as new tokens arrive
def generate_response(prompt):
    messages = [{"role": "user", "content": prompt}]
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # skip_prompt: don't echo the input; skip_special_tokens: hide markers such as <|im_end|>
    streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)
    generation_kwargs = {
        **model_inputs,                # input_ids and attention_mask
        "streamer": streamer,
        "max_new_tokens": max_length,  # adjust as needed
        "do_sample": True,             # temperature/top_p only take effect when sampling
        "temperature": 0.95,
        "top_p": 0.8,
    }

    # Run generation in a background thread; the streamer yields text as it is produced
    thread = Thread(target=model.generate, kwargs=generation_kwargs)
    thread.start()

    generated_text = ""
    for new_text in streamer:
        generated_text += new_text
        yield generated_text  # stream the text generated so far
    thread.join()  # wait for generation to finish

# Handle user input
if prompt := st.chat_input("Enter your question"):
    st.session_state.messages.append({"role": "user", "content": prompt})
    with st.chat_message("user"):
        st.markdown(prompt)

    with st.chat_message("assistant"):
        message_placeholder = st.empty()  # placeholder updated in place
        full_response = ""
        for full_response in generate_response(prompt):
            message_placeholder.markdown(full_response)

    # Save the complete reply to the chat history
    st.session_state.messages.append({"role": "assistant", "content": full_response})

# Clear button
if st.button("Clear"):
    st.session_state.messages = [{"role": "assistant", "content": "How can I help you?"}]
    st.rerun()  # on Streamlit < 1.27, use st.experimental_rerun()
```
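The streaming version runs `model.generate` in a background thread while the main thread iterates over `TextIteratorStreamer`, which passes decoded text between the two through a queue. The standard-library sketch below imitates that producer/consumer pattern with a fake token source instead of the model, so it runs without a GPU. `ToyStreamer` and `fake_generate` are hypothetical stand-ins, not Transformers classes.

```python
import queue
import threading
import time
from typing import Iterator, Optional


class ToyStreamer:
    """Hypothetical imitation of TextIteratorStreamer: the producer put()s, the consumer iterates."""

    _END = object()  # sentinel marking the end of generation

    def __init__(self, timeout: Optional[float] = None):
        self._queue: queue.Queue = queue.Queue()
        self._timeout = timeout

    def put(self, text: str) -> None:
        self._queue.put(text)

    def end(self) -> None:
        self._queue.put(self._END)

    def __iter__(self):
        return self

    def __next__(self) -> str:
        # Blocks until the producer delivers a chunk (raises queue.Empty after timeout).
        item = self._queue.get(timeout=self._timeout)
        if item is self._END:
            raise StopIteration
        return item


def fake_generate(tokens: list[str], streamer: ToyStreamer, delay: float = 0.01) -> None:
    """Stand-in for model.generate: emits one text chunk at a time, then signals the end."""
    for tok in tokens:
        time.sleep(delay)  # pretend each token takes time to compute
        streamer.put(tok)
    streamer.end()


def stream_response(tokens: list[str]) -> Iterator[str]:
    """Yield the accumulated text after every chunk, like generate_response() in the app."""
    streamer = ToyStreamer(timeout=10.0)
    thread = threading.Thread(target=fake_generate, args=(tokens, streamer))
    thread.start()
    text = ""
    for chunk in streamer:
        text += chunk
        yield text
    thread.join()


if __name__ == "__main__":
    for partial in stream_response(["There ", "are ", "3 ", "r's."]):
        print(partial)
```

The real `TextIteratorStreamer` also accepts a `timeout` argument; setting it keeps the UI from waiting forever if generation fails inside the thread.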

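Run the following command in the terminal to start the Streamlit service. Map the port to your local machine as described in the compshare instructions, then open http://<external address>:11434/ in a browser to see the chat interface.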

```bash
streamlit run /workspace/streamlit_qwq.py --server.address 0.0.0.0 --server.port 11434
```

@敢敢のwings (verified author)


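Image Info

- Run time: 1 H
- Image size: 110GB
- Last updated: 2026-02-04
- Supported GPUs: RTX 40 series, 2080, 3080 Ti, 3090, 48G RTX 40 series, 2080 Ti, H20, A800, P40, A100, RTX 50 series, V100S, and 13 more
- CUDA version: 12.1
- Applications: JupyterLab (port 8888)
- Version: v1.0 (2026-02-04)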